The Node.js Developer's Guide to Unix Domain Sockets: 50% Lower Latency Than TCP loopback

Links that I found interesting this week:

Shift Node.js to Annual Major Releases and Shorten LTS Duration

Bun 1.2.19 Adds Isolated Installations for Better Monorepo Support

Prisma 6.12.0 has been released with the new Prisma generator updates

ESLint prettier config with 30 million downloads gets hijacked

TLDR

Unix domain sockets deliver ~50% lower latency than TCP loopback for Node.js IPC. In our testing, we measured an average latency of 334µs for TCP loopback vs. 130µs for a Unix domain socket (about 61% lower in this run). Learn how to implement IPC using filesystem-based sockets instead of ports, and discover why tools like PM2 and PostgreSQL drivers use Unix sockets.

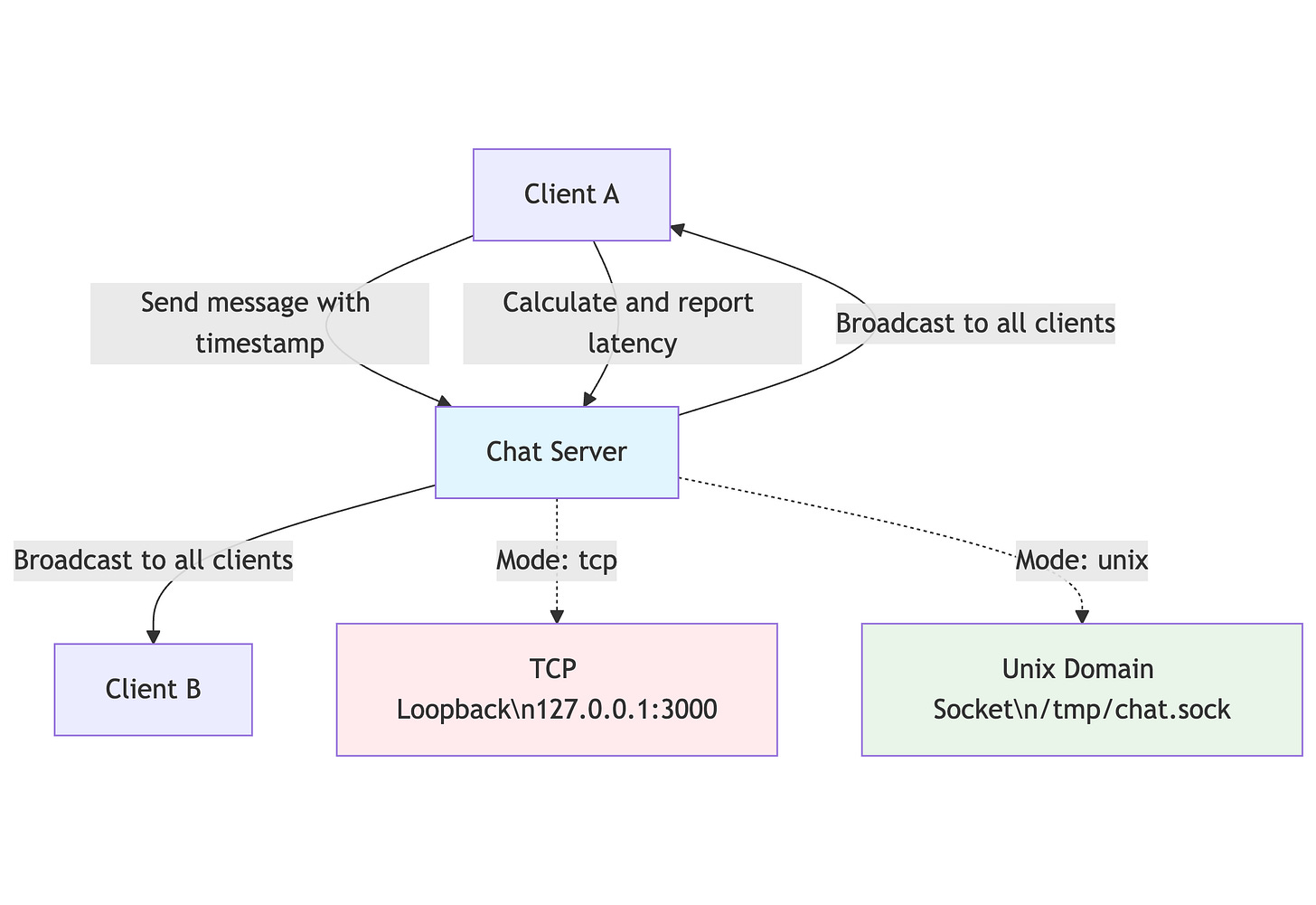

We'll build a dual-mode chat server that switches between both transport methods, look at when to choose Unix sockets over TCP, and see how they help you avoid port conflicts in your local applications.

Difference between TCP loopback and Unix socket

When building IPC in Node.js apps, devs often have to choose between TCP sockets and Unix domain sockets. Both serve a similar purpose, but their underlying mechanisms and performance characteristics differ.

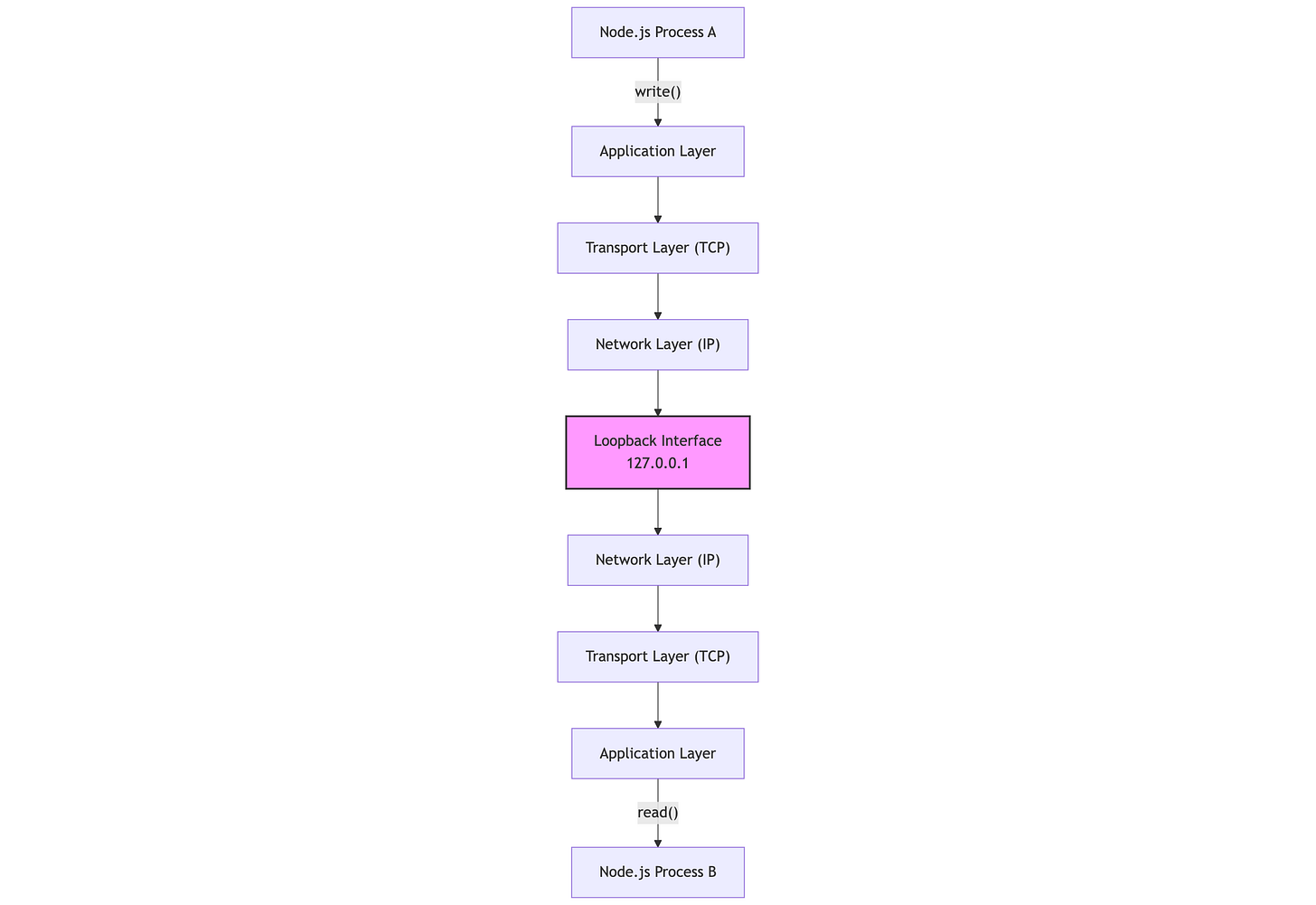

TCP Loopback: Going through the whole network stack

A TCP loopback connection is an ordinary TCP socket bound to the loopback address (127.0.0.1). Even though both endpoints live on the same machine, the data still traverses the kernel's network stack before it reaches its final destination, the Node.js process. Along the way, it goes through:

TCP handshake (SYN, SYN-ACK, ACK)

TCP windowing and flow control

IP packet routing (though simplified for loopback)

Kernel network buffer management

Context switches between user and kernel space

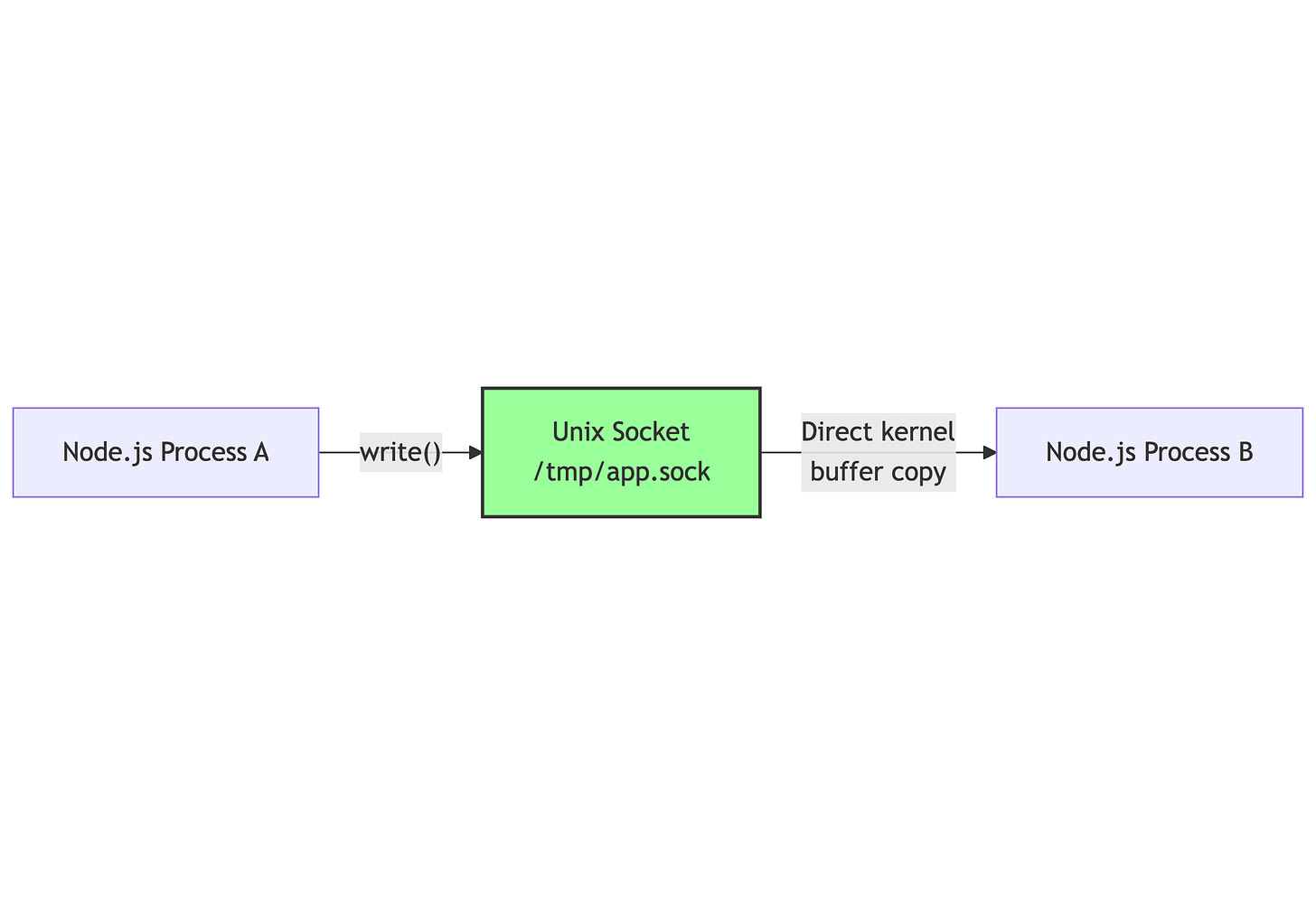

Unix Domain Sockets: The Fast Path

Unix domain sockets, on the other hand, provide a more direct communication channel:

Data sent over a Unix socket doesn't need to traverse the whole network stack. The socket is addressed by a filesystem path rather than an IP address and port, and the kernel passes data directly between the two processes.

Unix sockets appear as special files (often with a .sock extension) and use the kernel's IPC mechanisms directly.

This results in:

No protocol overhead (no headers, checksums, or sequence numbers)

Single copy operation in kernel space

Reduced context switches

Lower CPU usage

Significantly lower latency compared to TCP loopback
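To make the "special file" idea concrete, here is a minimal sketch that listens on a filesystem path and asks the filesystem what it sees there. The names `demoSocketPath` and `inspectSocketFile` are made up for this illustration and are not part of the chat project.

```javascript
const net = require('node:net');
const fs = require('node:fs');
const os = require('node:os');
const path = require('node:path');

// Hypothetical socket path used only for this demonstration.
const demoSocketPath = path.join(os.tmpdir(), `uds-demo-${process.pid}.sock`);

// Starts a server on the given path and reports what the filesystem sees there.
function inspectSocketFile(socketPath, callback) {
  const server = net.createServer();
  server.once('error', callback);
  server.listen(socketPath, () => {
    const stats = fs.statSync(socketPath);
    const result = { isSocket: stats.isSocket(), isRegularFile: stats.isFile() };
    // Closing the server also unlinks the socket file that Node.js created.
    server.close(() => callback(null, result));
  });
}
```

Calling `inspectSocketFile(demoSocketPath, console.log)` on Linux or macOS should report a socket rather than a regular file: the path is only a rendezvous point, and the messages themselves are never written to disk.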

Real-world libraries using Unix domain sockets

Libraries that you most likely use on a day-to-day basis provide a way to establish a connection through Unix sockets. Let's take a look at some of them.

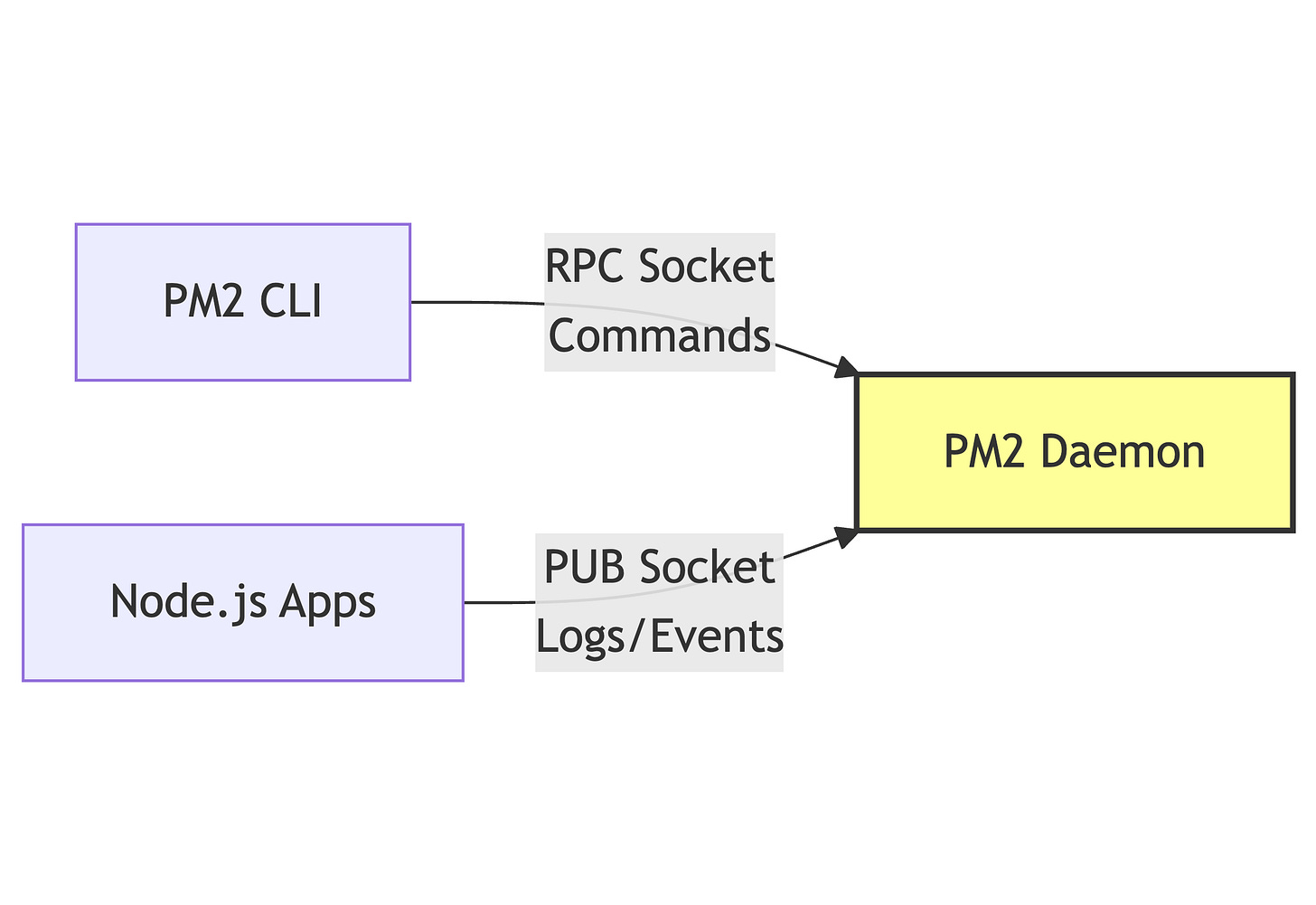

PM2

Process managers are an obvious place to find Unix sockets: they are specific to the current machine and are not intended for external communication. One example is PM2, probably the most popular process manager in the Node.js ecosystem, with over 42,000 stars on GitHub at the time of writing.

In this context, two Unix sockets matter:

RPC socket to interact with the daemon that runs processes.

PUB socket that plays the role of a pub-sub channel or IPC.

This architecture allows PM2 to:

Handle CLI commands through the RPC socket without affecting running processes

Stream logs and events from all managed processes through the PUB socket

Maintain low-latency communication between components

Avoid TCP port conflicts since everything uses file-based sockets

You can find more about how PM2 makes use of those sockets directly in the source code.

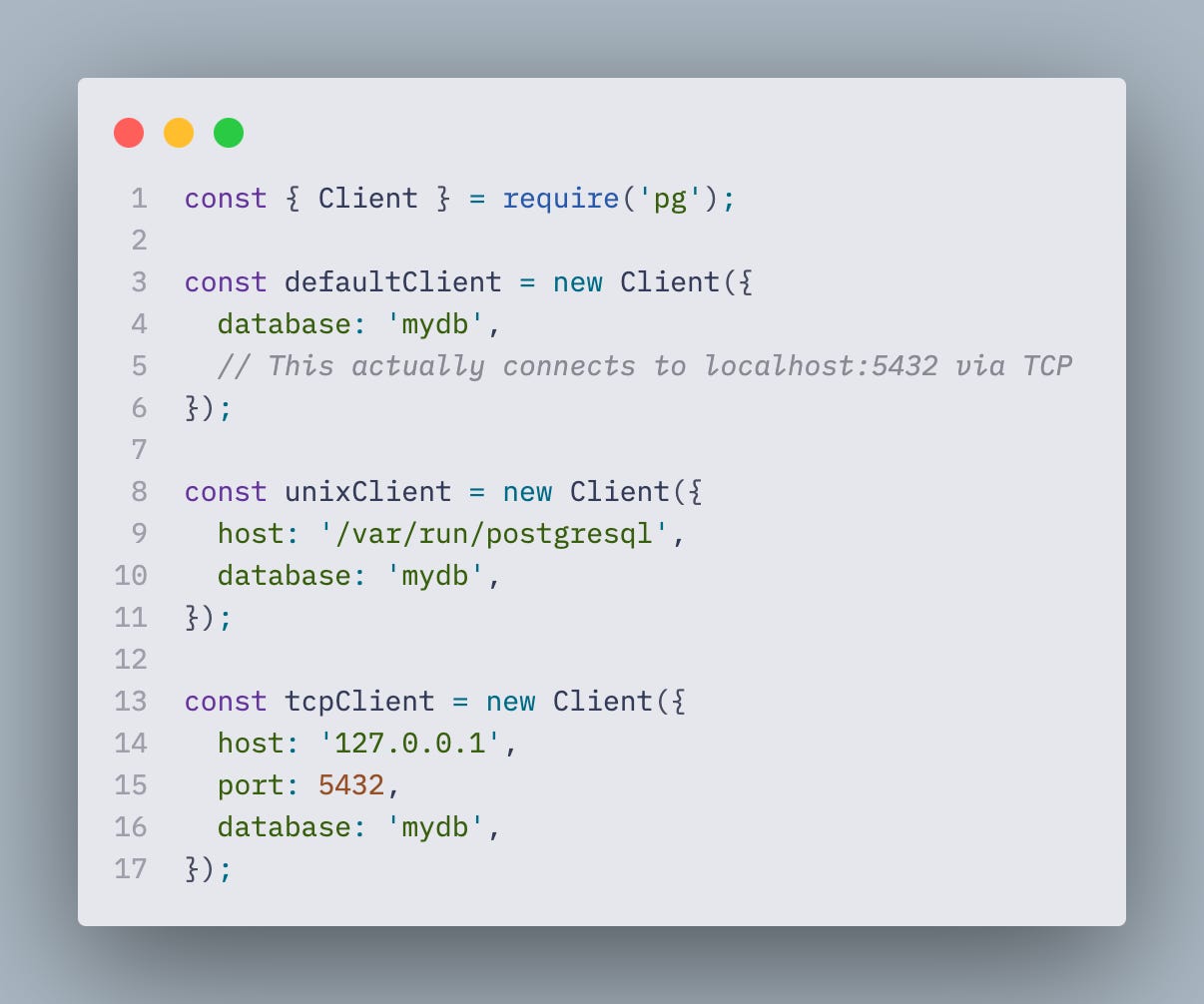

Postgres driver for Node.js

PostgreSQL doesn't require Unix sockets, and the `pg` driver defaults to TCP connections even for localhost, which is exactly the TCP loopback path described above. To use a Unix socket, you must explicitly specify the socket path:
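As a hedged sketch of what that configuration can look like: node-postgres treats a `host` value that starts with `/` as a socket directory instead of a hostname. The directory below is an assumption (it is a common default for Debian/Ubuntu packages), and `unixSocketConfig` and `socketFileFor` are hypothetical helper names.

```javascript
const path = require('node:path');

// Assumption: PostgreSQL's socket directory. Debian/Ubuntu packages commonly
// use /var/run/postgresql; other builds often use /tmp. Check the server's
// unix_socket_directories setting for the real value.
const SOCKET_DIR = '/var/run/postgresql';

// Hypothetical helper: builds a node-postgres config that targets a Unix socket.
// A `host` starting with "/" is treated by pg as a socket directory.
function unixSocketConfig(database, socketDir = SOCKET_DIR, port = 5432) {
  return { host: socketDir, port, database };
}

// The socket file PostgreSQL creates inside that directory is named after the port.
function socketFileFor(config) {
  return path.posix.join(config.host, `.s.PGSQL.${config.port}`);
}

// Usage with the pg package (requires `npm install pg` and a running server):
//   const { Client } = require('pg');
//   const client = new Client(unixSocketConfig('mydb'));
//   await client.connect();
```

Note that the port is still part of the configuration even though no TCP port is opened: it only selects which socket file in the directory to use.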

Building IPC chat with a Unix socket

Now to the fun part. We're going to build an IPC chat app for local processes to communicate using Node.js and a Unix domain socket.

After we build the chat, we're going to switch it from a Unix domain socket to TCP loopback and measure the latency difference between the two. It is a good opportunity to validate the claims made above.

Project Overview

The app consists of the following parts:

Multi-client Chat Server: Accepts multiple concurrent connections and broadcasts messages between all connected clients

Benchmarking Clients: Connect to the server and send timestamped messages to measure round-trip latency

Dual Transport Mode: Single codebase that switches between TCP loopback (127.0.0.1:3000) and Unix domain socket (/tmp/chat.sock) based on a command-line parameter

Building the server

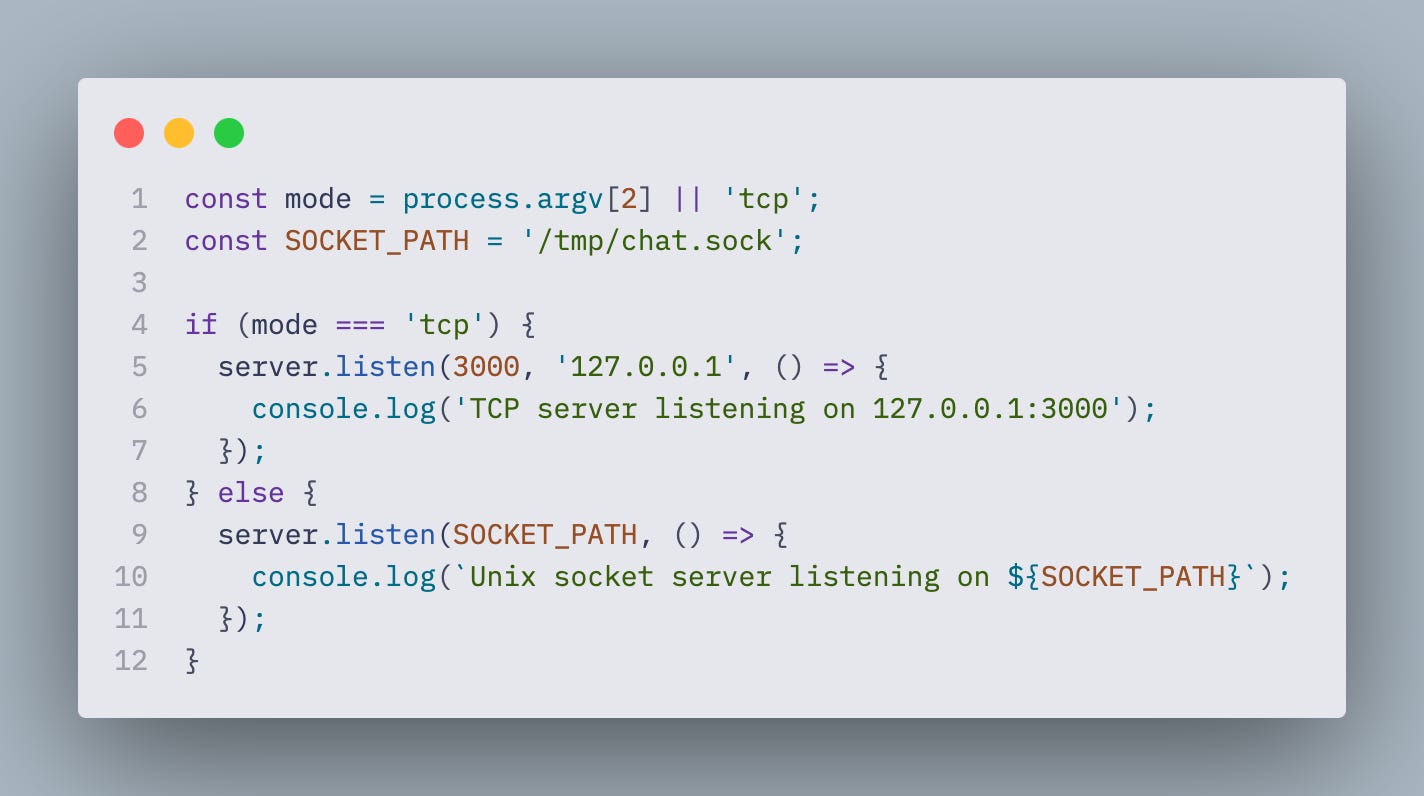

The chat server implementation handles both TCP and Unix socket connections. The main difference is in how the server is configured to accept incoming connections.

The server accepts a command-line argument to set the transport mode and configures the listener:
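A minimal sketch of that configuration step, assuming the mode is passed as `tcp` or `unix` in the first command-line argument. The function names `listenOptionsFor` and `startServer` are illustrative and may not match the repository.

```javascript
const net = require('node:net');
const fs = require('node:fs');

// Endpoints named in the project overview.
const TCP_ENDPOINT = { host: '127.0.0.1', port: 3000 };
const UNIX_SOCKET_PATH = '/tmp/chat.sock';

// Maps the transport mode ("tcp" or "unix") to options for server.listen().
function listenOptionsFor(mode) {
  if (mode === 'tcp') return { ...TCP_ENDPOINT };
  if (mode === 'unix') return { path: UNIX_SOCKET_PATH };
  throw new Error(`Unknown transport mode: ${mode}`);
}

// Creates the server and starts listening on the endpoint for the given mode.
function startServer(mode, onConnection) {
  const options = listenOptionsFor(mode);
  if (options.path) {
    // A socket file left behind by a crashed run would cause EADDRINUSE.
    try {
      fs.unlinkSync(options.path);
    } catch (err) {
      if (err.code !== 'ENOENT') throw err;
    }
  }
  const server = net.createServer(onConnection);
  server.listen(options, () => console.log(`Chat server listening (${mode})`, options));
  return server;
}

// Example entry point: `node server.js unix` or `node server.js tcp`.
// const server = startServer(process.argv[2] ?? 'tcp', (socket) => { /* ... */ });
```

Everything after `listen()` is identical for both transports, which is what makes a fair comparison possible: only the endpoint changes.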

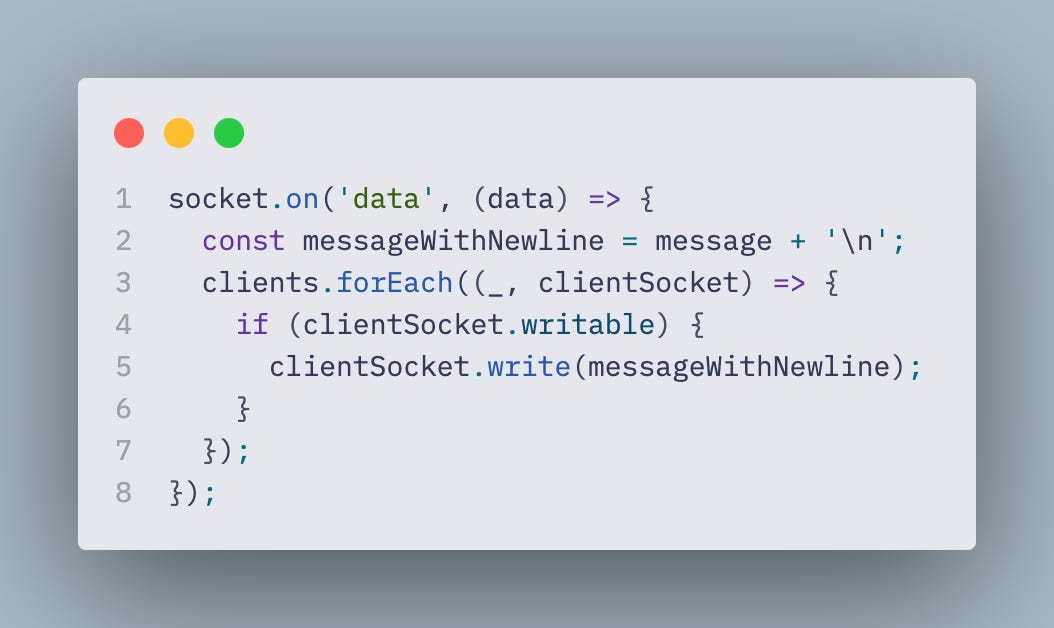

When a client sends a message, the server broadcasts it to all connected clients, creating the round-trip path needed for latency measurement:
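The broadcast step could look roughly like this. It is a sketch that assumes the sender also receives its own message back (which is what closes the round trip); `clients`, `broadcast`, and `handleConnection` are illustrative names.

```javascript
// Tracks every connected client socket.
const clients = new Set();

// Writes one message to every connected client, including the original sender,
// so the sender can time the round trip.
function broadcast(message) {
  for (const client of clients) {
    if (!client.destroyed) client.write(message);
  }
}

// Connection handler to pass to net.createServer().
function handleConnection(socket) {
  clients.add(socket);
  socket.on('data', (chunk) => broadcast(chunk));
  // Forget the socket once it is gone so we never write to a dead connection.
  socket.on('close', () => clients.delete(socket));
  socket.on('error', () => clients.delete(socket));
}
```

The handler only uses the generic stream interface of the socket, so it behaves the same for TCP and Unix socket connections.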

The server also extracts latency data from JSON messages and aggregates performance statistics, reporting them every 5 seconds to provide real-time performance metrics during benchmarking.
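One way to sketch that aggregation is shown below. The wire format is not shown in this article, so the `latencyMicros` field is an assumption, as is resetting the counters after each report.

```javascript
// Running totals for the current reporting window.
const stats = { totalMicros: 0, samples: 0 };

// Assumed message shape: {"latencyMicros":130}. The field name is
// hypothetical; the article does not show the real wire format.
function recordLatency(rawMessage) {
  let parsed;
  try {
    parsed = JSON.parse(rawMessage);
  } catch {
    return false; // Not JSON (for example a plain chat line): ignore it.
  }
  if (parsed === null || typeof parsed.latencyMicros !== 'number') return false;
  stats.totalMicros += parsed.latencyMicros;
  stats.samples += 1;
  return true;
}

// Formats one report line in the style of the benchmark output, then resets.
function flushStats(label) {
  if (stats.samples === 0) return null;
  const avg = Math.round(stats.totalMicros / stats.samples);
  const line = `${label} Stats: avg=${avg}µs, samples=${stats.samples}`;
  stats.totalMicros = 0;
  stats.samples = 0;
  return line;
}

// Reports every 5 seconds; unref() keeps the timer from holding the process open.
function startReporting(label) {
  return setInterval(() => {
    const line = flushStats(label);
    if (line) console.log(line);
  }, 5000).unref();
}
```

Keeping only a running sum and a count means the server never stores individual samples, so the bookkeeping itself adds very little work to the path being measured.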

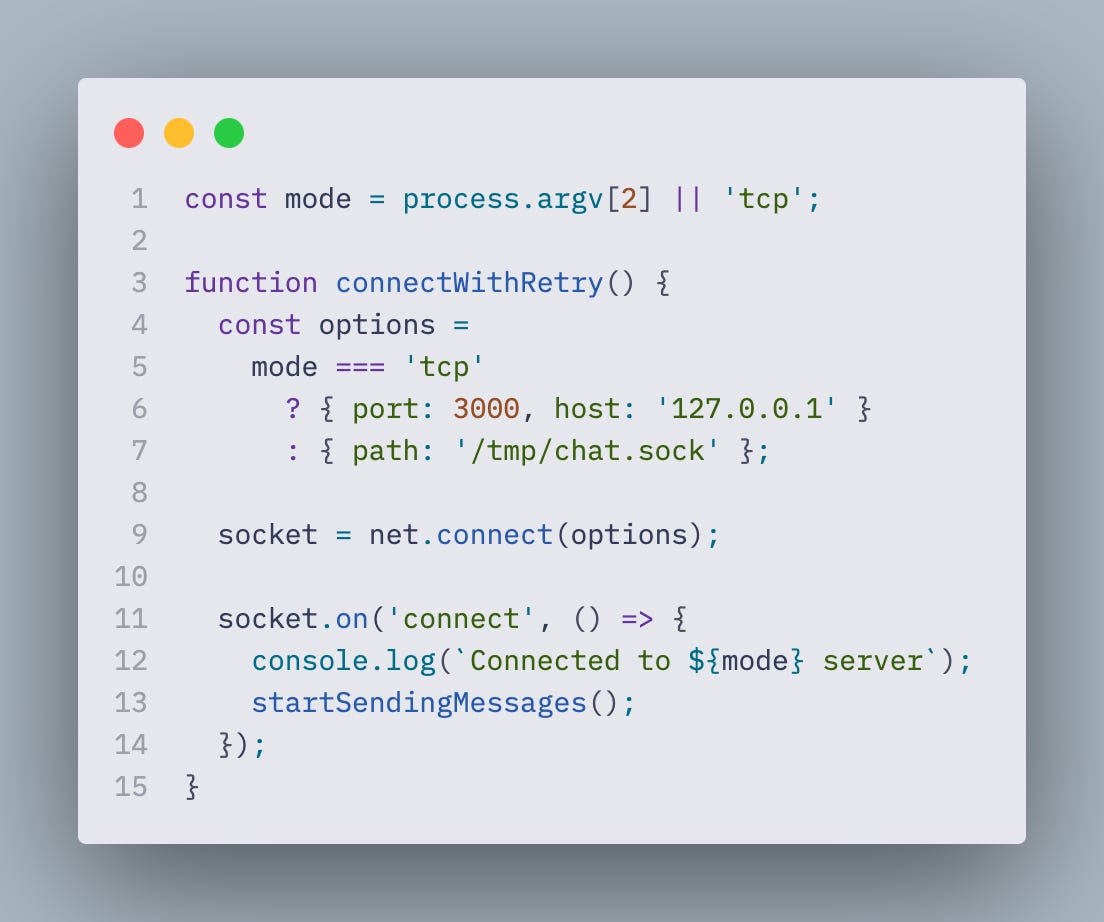

Building the client

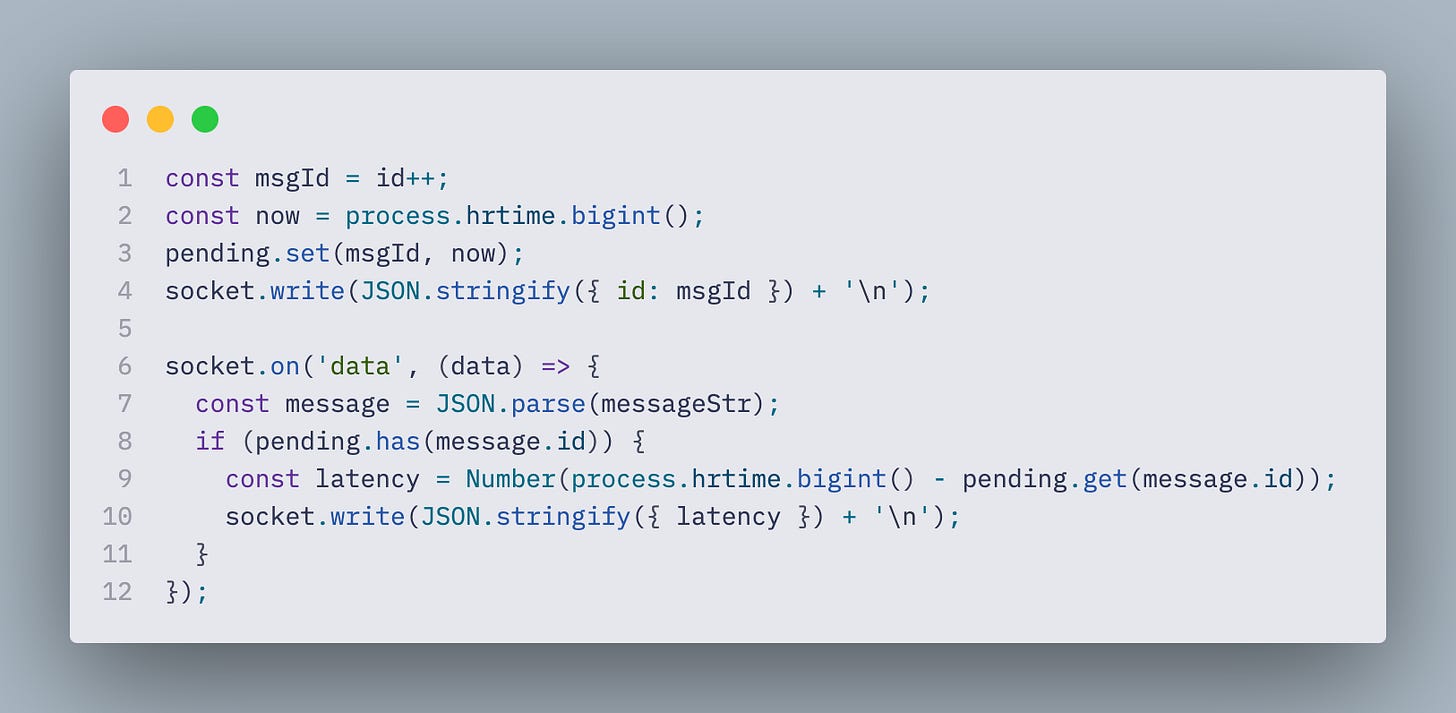

The client implementation is similar to the server's dual-mode approach, connecting to either TCP or Unix socket endpoints based on the command-line parameter. The important part lies in the high-precision latency measurement.

The client uses Node.js's process.hrtime.bigint() for nanosecond-precision timing to measure round-trip latency:
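A sketch of the timing logic, assuming newline-delimited JSON messages that carry their own send time. The field names `id` and `sentAtNs` and the helper names are hypothetical.

```javascript
// Returns the current monotonic time in nanoseconds as a BigInt.
const nowNs = () => process.hrtime.bigint();

// Builds a newline-delimited JSON message carrying its send time.
// Assumed fields (hypothetical): `id` and `sentAtNs`. BigInt is not valid in
// JSON, so the timestamp travels as a string.
function buildMessage(id) {
  return JSON.stringify({ id, sentAtNs: nowNs().toString() }) + '\n';
}

// Computes round-trip latency in microseconds for a message echoed by the server.
function roundTripMicros(line, receivedAtNs = nowNs()) {
  const { sentAtNs } = JSON.parse(line);
  const elapsedNs = receivedAtNs - BigInt(sentAtNs);
  return Number(elapsedNs) / 1000;
}
```

`process.hrtime.bigint()` is relative to an arbitrary point in the past, so its values are not guaranteed to be comparable across processes. With a broadcasting server, a client should therefore only time the messages it sent itself, for example by checking `id`.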

Testing and measuring performance differences

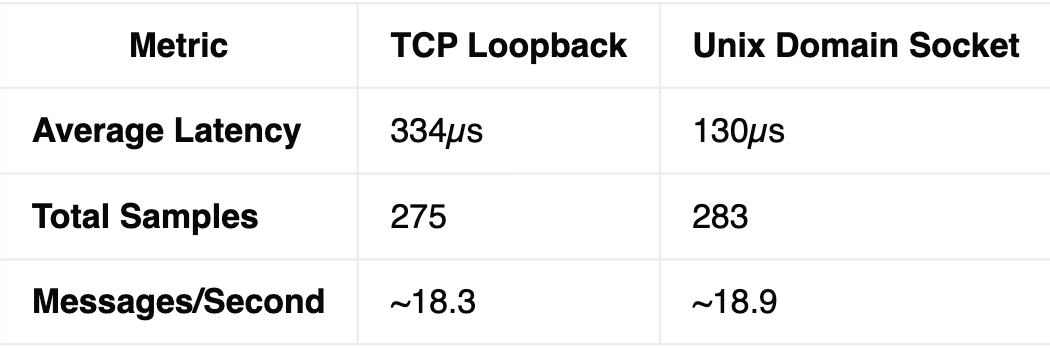

We ran both transport modes through identical 15-second benchmark tests using two concurrent clients. Each client sent timestamped messages and measured round-trip latency.

Benchmark Configuration:

Test Duration: 15 seconds per transport mode

Concurrent Clients: 2 clients per server

Message Frequency: 1 message every 10ms per client

Latency Measurement: Nanosecond precision using `process.hrtime.bigint()`

Raw Performance Results:

Complete Test Output:

TCP Stats: avg=334µs, samples=275

UNIX Stats: avg=130µs, samples=283

P.S. If you're interested in the exact code and want to test things for yourself, you can get the repository with full server, client, and bash script code.

Wrap up

Now you see that there is more to a Node.js server than a simple TCP connection.

TCP can be used for local IPC, but there is a more efficient method of doing so using a Unix domain socket.

Another major benefit of using a Unix socket is that you don't have to think about occupied ports. You can run your Node.js app, and it will work.

In contrast, running apps over TCP loopback requires you to specify a port for each one, and you can't use the same port for more than one running server.

If you're building an app that uses IPC and performance matters most to you, or you simply don't want to deal with port conflicts, Unix sockets might be a good way to go.
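The port conflict is easy to reproduce. The sketch below starts two servers on the same loopback port and reports how the second one fails; `tryListen` and `demonstratePortConflict` are names made up for this example.

```javascript
const net = require('node:net');

// Tries to listen with the given options and resolves with the outcome.
function tryListen(options) {
  return new Promise((resolve) => {
    const server = net.createServer();
    server.once('error', (err) => resolve({ ok: false, code: err.code, server }));
    server.listen(options, () => resolve({ ok: true, server }));
  });
}

// Starts two servers on the same TCP port; the second one is expected to fail.
async function demonstratePortConflict() {
  const first = await tryListen({ host: '127.0.0.1', port: 0 }); // 0 = any free port
  const { port } = first.server.address();
  const second = await tryListen({ host: '127.0.0.1', port });
  first.server.close();
  return second;
}
```

The second server fails with `EADDRINUSE`. A Unix socket path that is already in use fails the same way, but the path is a name you choose yourself (for example, one per app), so collisions are much easier to avoid than with a shared port range.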