The 500x performance gap between Node.js version managers (and why you might not care)

Links that I found useful this week:

Satisfies in TypeScript - Adam Rackis dives into the nitty-gritty details of what the satisfies keyword is in TypeScript, how it works, and when you should probably use it.

Node.js 18 LTS EOL extended from April 2025 to May 2032 on Ubuntu - Node.js 18 support on Ubuntu gets extended from April 2025 to May 2032. If you haven't migrated yet, it is a good reminder to do so.

AWS Weekly Roundup - I recently found out that AWS has its own podcast and weekly update covering the latest changes to its services. If you're building on the platform, it's a good way to stay up to date.

TLDR

Your choice of Node.js version manager can make your shell startup 500x slower. Our benchmarks show NVM taking up to 508ms to initialize in a fresh Zsh shell, while Volta manages the same in 1ms. But here's the catch: if you work in long-lived shells, you'll barely notice it. The massive performance gap only shows up when you're constantly spawning new shells—think CI pipelines, IDE terminals, or heavy terminal multiplexer usage. For most developers, a version manager's features matter more than just raw speed.

How we tested

I built a benchmark suite testing three popular version managers:

nvm - The bash-based manager most developers already know

fnm - The "Fast Node Manager" written in Rust

Volta - The JavaScript toolchain manager, also in Rust

Test Environment

Hardware: MacBook Pro 16" M3 Max with 48GB RAM

OS: macOS 15.5

Shells: bash 3.2.57, zsh 5.9 with oh-my-zsh

Node versions: 18.17.0, 20.10.0, and 24.3.0

The test matrix covered:

3 scenarios: shell startup, version switching, and real-world workflow

2 shells: bash (minimal) and zsh (with typical developer setup)

2 modes: cold (fresh process) and warm (pre-loaded parent shell)

3 Node versions: 18.17.0, 20.10.0, and 24.3.0

That's 36 different test combinations, each run multiple times for statistical accuracy.
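As a quick sanity check on that count, the four dimensions listed above can be enumerated directly. This is only a sketch: the scenario labels are my shorthand, not names taken from the benchmark suite.

```shell
#!/usr/bin/env bash
# Count the combinations in the test matrix:
# scenarios x shells x modes x Node versions.
# The scenario labels are shorthand chosen for this sketch.
count=0
for scenario in startup switching workflow; do
  for shell in bash zsh; do
    for mode in cold warm; do
      for node_version in 18.17.0 20.10.0 24.3.0; do
        count=$((count + 1))
      done
    done
  done
done
echo "combinations: $count"   # prints "combinations: 36"
```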

Understanding cold vs warm

The distinction between cold and warm starts is important to understanding these benchmarks:

Cold start = What happens when you:

Open a new terminal window/tab

SSH into a server

Run a script that spawns a new shell

Execute commands in CI/CD pipelines

Use git hooks that invoke shell commands

Warm start = What happens when you:

Switch directories in an existing shell

Run commands in a subshell, $(...) or <(...)

Execute scripts that inherit the parent shell's environment

Use screen or tmux panes that share a session

Here's what happens during each type of start:

NVM pays a huge tax on every cold start because it's implemented as shell scripts that must be parsed. FNM and Volta are compiled binaries that start instantly. Even in warm scenarios, NVM's shell functions are slower than calling compiled code.
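If you want a rough feel for the cold/warm difference on your own machine, a small timing helper is enough. This is a minimal sketch and not the benchmark suite used for this post: `time_cmd` is a hypothetical helper name, and it relies on bash's `time` keyword with `TIMEFORMAT`, so it needs bash rather than plain `sh`.

```shell
#!/usr/bin/env bash
# Print the wall-clock seconds a command takes, using bash's `time` keyword.
# TIMEFORMAT=%R limits the report to elapsed real time.
# `time_cmd` is a hypothetical helper for this sketch.
time_cmd() {
  local TIMEFORMAT=%R
  { time "$@" >/dev/null 2>&1; } 2>&1
}

# Baseline: a bare shell that skips its startup files.
echo "baseline bash: $(time_cmd bash --noprofile --norc -c exit)s"

# Cold start: a fresh interactive shell that re-reads its startup files,
# including whatever line loads the version manager.
#   time_cmd zsh -i -c exit
#
# Warm: define time_cmd in a shell where the manager is already loaded,
# then time a switch (the version number is just an example).
#   time_cmd fnm use 20
```

A single run is noisy, so repeat each measurement several times and look at the spread before drawing conclusions.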

The benchmarks

Now, we’re getting into the meat.

Shell startup overhead

The most basic benchmark is shell startup time:

Execution time:

The pattern is that NVM's shell script architecture creates significant overhead when initializing, while the Rust-based tools remain lean.

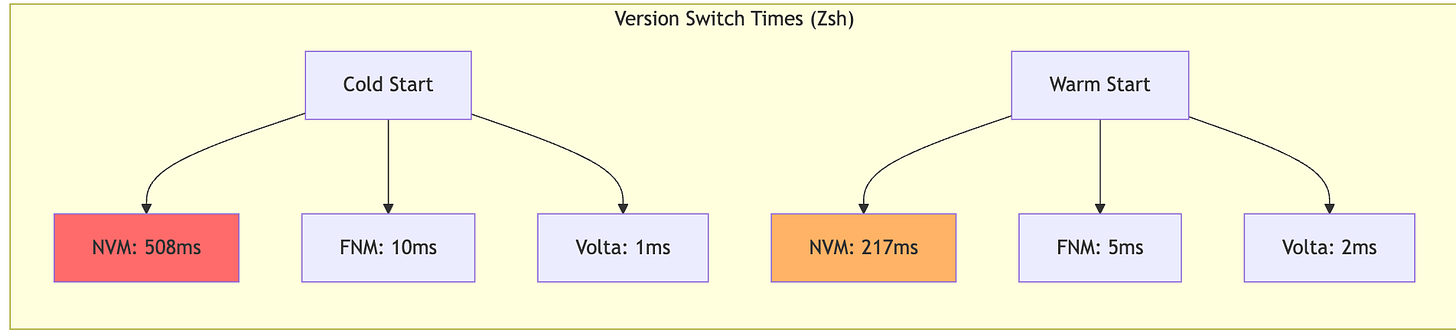

Version switching performance

Once initialized, how fast can each manager switch between Node versions?

Execution time:

Even in warm shells where the manager is pre-loaded, NVM takes roughly 150 to 220ms to switch versions, while competitors complete the task in single-digit milliseconds. That's the difference between instant and noticeable.

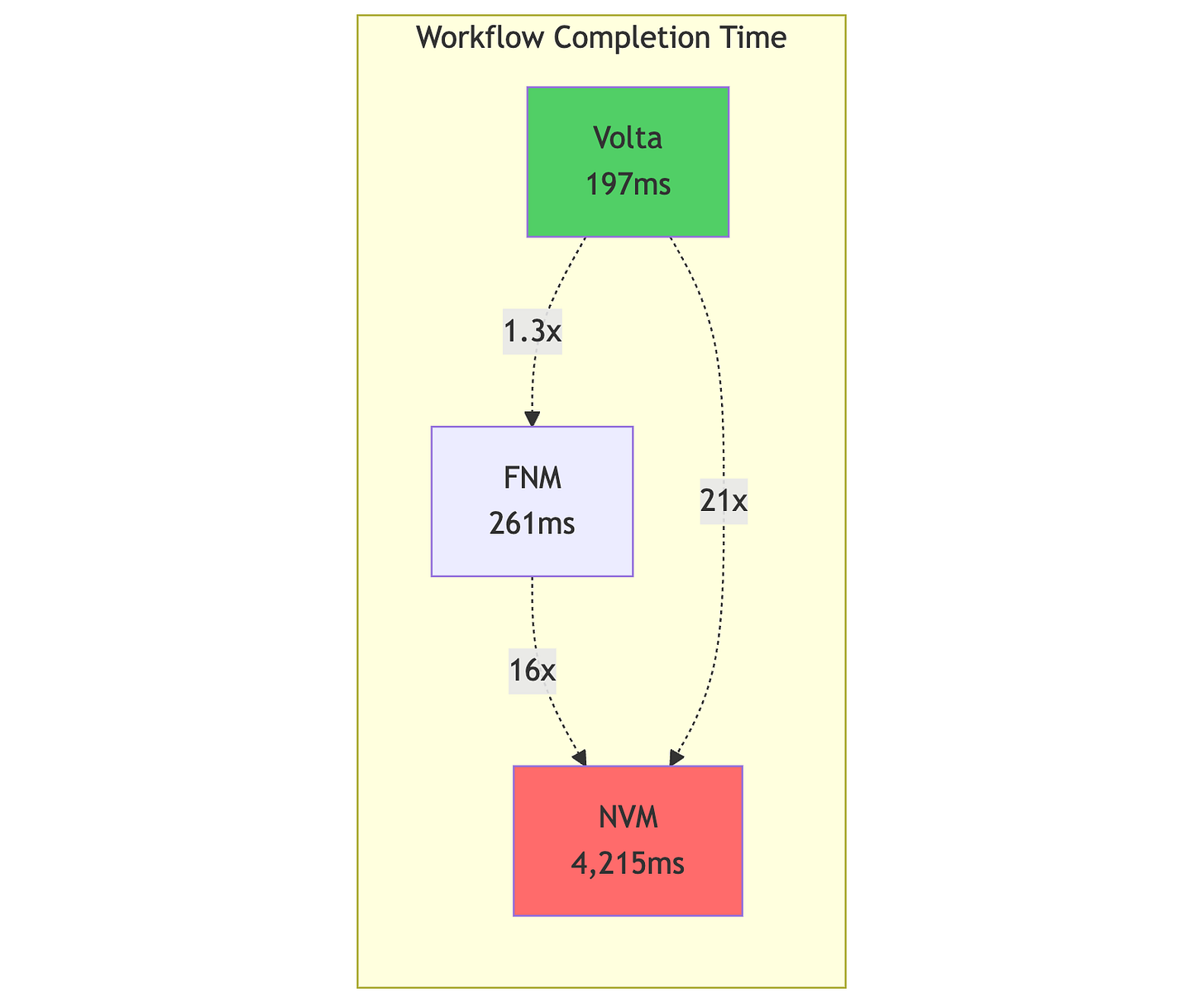

Real-world workflow impact

I simulated a typical development workflow:

Open a new shell

Navigate to Project A (Node 18)

Run a script

Navigate to Project B (Node 20)

Run tests

Navigate to Project C (Node 24)

Start dev server

Open 5 additional shells for various tasks

Total time for the complete workflow:

Execution time:

In practical terms, with NVM, this workflow takes over 4 seconds. With Volta, it's done in 200ms. Run this workflow 50 times a day, and NVM costs you over 3 minutes of waiting.

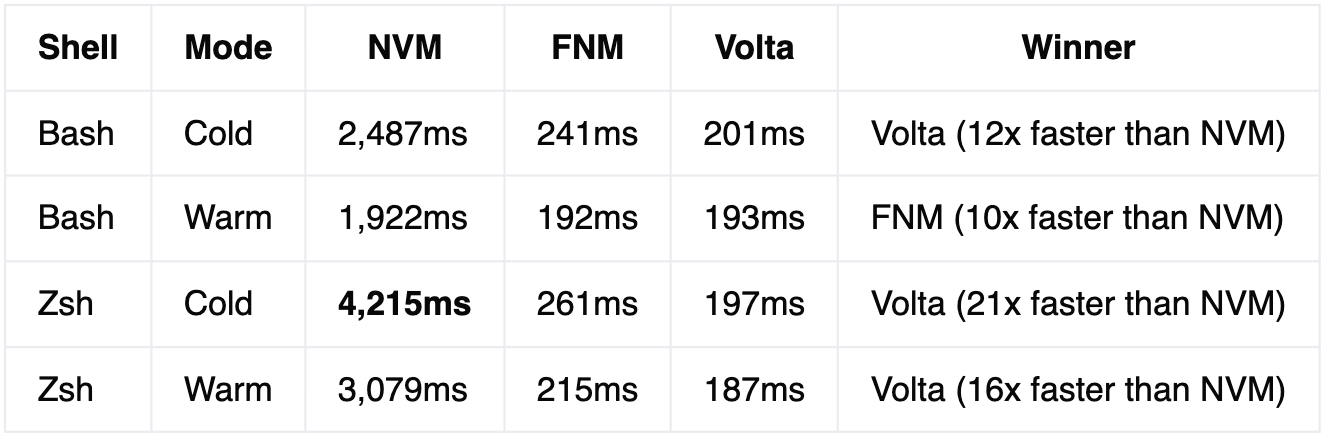

Key findings

Version-agnostic performance: Node 18, 20, and 24 performed identically within each manager. The version manager is the bottleneck, not Node itself.

Shell matters enormously: Bash users see minimal differences because bash itself is lightweight. Zsh users—especially with frameworks like oh-my-zsh—experience dramatic slowdowns with NVM.

Cold starts expose architecture: NVM parses hundreds of shell functions on every initialization. FNM and Volta use compiled binaries that start instantly.

Warm performance still matters: Even in pre-initialized shells, NVM's version switching is 40-100x slower than alternatives.

The compound effect: In workflows with multiple shell spawns (CI, automation, IDE terminals), NVM's overhead multiplies into minutes of daily waiting.

Why these numbers matter (and when they don't)

Let's put these benchmarks in perspective with real development scenarios:

The Daily Cost Calculator

Heavy Terminal User (Multiple projects, frequent context switching):

50 new shells/day (terminal tabs, tmux panes, VS Code terminals)

30 version switches/day (jumping between projects)

With NVM on Zsh: (50 × 543ms) + (30 × 217ms) = 33.6 seconds/day

With Volta on Zsh: (50 × 4ms) + (30 × 2ms) = 0.26 seconds/day

Daily savings: about 33 seconds, which is roughly 12 minutes/month over 22 working days

Moderate Terminal User:

10 new shells/day

5 version switches/day

With NVM: 6.5 seconds/day wasted

With Volta: 0.05 seconds/day

Monthly savings: about 2.4 minutes over 22 working days

Remote Development (SSH sessions, cloud dev environments):

Each SSH connection = cold start

Remote VS Code = multiple shell spawns

Git hooks and scripts = more cold starts

The overhead multiplies quickly in remote contexts
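The heavy and moderate profiles above are simple multiplications, so you can plug in your own numbers. The sketch below uses the Zsh figures quoted in this post; the 22 working days per month is my assumption, and `daily_cost_ms` and `report` are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Daily overhead in milliseconds:
#   new shells * startup cost + version switches * switch cost
daily_cost_ms() {
  local shells=$1 startup_ms=$2 switches=$3 switch_ms=$4
  echo $(( shells * startup_ms + switches * switch_ms ))
}

# Figures quoted in the post for Zsh (NVM: 543ms / 217ms, Volta: 4ms / 2ms).
nvm_heavy=$(daily_cost_ms 50 543 30 217)
volta_heavy=$(daily_cost_ms 50 4 30 2)
nvm_moderate=$(daily_cost_ms 10 543 5 217)
volta_moderate=$(daily_cost_ms 10 4 5 2)

# ASSUMPTION: 22 working days per month; adjust to taste.
working_days=22

# Print the daily saving and its monthly total in whole seconds.
report() {
  local label=$1 slow_ms=$2 fast_ms=$3
  local saved_ms=$(( slow_ms - fast_ms ))
  local monthly_s=$(( saved_ms * working_days / 1000 ))
  echo "$label: ${saved_ms} ms/day saved, about ${monthly_s} s/month"
}

report heavy "$nvm_heavy" "$volta_heavy"
report moderate "$nvm_moderate" "$volta_moderate"
```

Under that assumption the heavy profile comes to about 734 seconds a month (roughly 12 minutes) and the moderate one to about 142 seconds (a bit over 2 minutes).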

Breaking Down the Performance Gaps

Looking deeper at our benchmarks, some patterns become clear:

Architecture Matters More Than Features

All three managers can pin a Node version per project (NVM and FNM read `.nvmrc`; Volta reads the `volta` field in `package.json`)

All three can auto-switch on directory change

But implementation (shell script vs compiled binary) creates 100-500x performance gaps

The Zsh Tax

NVM + Bash (cold): 2ms overhead

NVM + Zsh (cold): 542ms overhead

That's a 270x penalty just for using Zsh!

The reason? Zsh loads more initialization files, and NVM's shell functions interact poorly with Zsh's more complex parsing. If you're committed to NVM, switching to Bash could save you half a second per shell.
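One way to check how much of that gap comes from NVM itself rather than from the rest of your Zsh setup is to time sourcing `nvm.sh` in each shell without going through your interactive config. This is a rough sketch, not how the numbers in this post were produced: `nvm_source_time` is a hypothetical helper, and it assumes NVM lives in `$NVM_DIR` or the default `~/.nvm`.

```shell
#!/usr/bin/env bash
# Time how long a non-interactive shell takes to source nvm.sh.
# Prints elapsed seconds, or "skipped" if the shell or nvm.sh is missing.
# ASSUMPTION: nvm is installed in $NVM_DIR, falling back to ~/.nvm.
nvm_source_time() {
  local shell_bin=$1
  local nvm_script="${NVM_DIR:-$HOME/.nvm}/nvm.sh"
  local TIMEFORMAT=%R
  if ! command -v "$shell_bin" >/dev/null 2>&1 || [ ! -r "$nvm_script" ]; then
    echo "skipped"
    return 0
  fi
  # `-c` runs without the interactive rc files, so oh-my-zsh is not loaded.
  { time "$shell_bin" -c ". \"$nvm_script\"" >/dev/null 2>&1; } 2>&1
}

for candidate in bash zsh; do
  echo "$candidate: $(nvm_source_time "$candidate")"
done
```

If the two numbers come out close, the extra time in your interactive Zsh is more likely coming from the surrounding configuration than from NVM's own script.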

Warm Performance Still Matters

Even in long-lived shells, version switching shows dramatic differences:

NVM (warm): 148-217ms per switch

FNM (warm): 4-5ms per switch

Volta (warm): 2-3ms per switch

That 200ms difference is the boundary between "instant" and "did it freeze?" Every time you cd into a project directory, you're paying this tax.

The Psychological Factor

Beyond raw time, there's the user experience impact. A common rule of thumb for perceived latency:

<100ms feels instant

100-300ms is noticeable but acceptable

>300ms feels sluggish and breaks flow

On cold Zsh starts, NVM lands squarely in the "sluggish" band, and its warm version switches sit in "noticeable", while FNM and Volta stay in "instant" territory. Your tools should disappear into the background, not remind you they exist with every interaction.
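To see where your own measurements fall, the three bands above can be turned into a tiny classifier. This is a sketch: `classify_latency` is a hypothetical helper, and it treats exactly 100ms and exactly 300ms as "noticeable".

```shell
#!/usr/bin/env bash
# Map a latency in whole milliseconds onto the three bands listed above.
classify_latency() {
  local ms=$1
  if [ "$ms" -lt 100 ]; then
    echo "instant"
  elif [ "$ms" -le 300 ]; then
    echo "noticeable"
  else
    echo "sluggish"
  fi
}

classify_latency 542   # NVM cold start on Zsh, as quoted in the post: sluggish
classify_latency 3     # Volta warm switch, as quoted in the post: instant
```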

Making the switch

If you're convinced by the numbers, migration is straightforward:

From NVM to Volta:

    # Install Volta
    curl https://get.volta.sh | bash

    # Install your Node versions
    volta install node@18
    volta install node@20

    # Volta auto-switches based on package.json

From NVM to FNM:

    # Install FNM
    brew install fnm

    # Add to .zshrc (with the crucial --use-on-cd flag)
    eval "$(fnm env --use-on-cd)"

    # Reinstall the Node versions you use
    fnm install 18
    fnm install 20

The bottom line

The benchmarks don't lie: there's up to a 500x performance difference between Node.js version managers. But benchmarks also don't tell the whole story.

If you're feeling shell startup pain, switching from NVM to Volta or FNM will feel like getting a new computer. The difference is that dramatic. But if your workflow involves a few long-lived shells and you're happy with NVM's features, the performance gap might barely affect you.

Choose your tools based on your workflow, not just numbers. But now you know: when your terminal feels slow, it might not be your imagination. It might just be 542 milliseconds of shell functions being parsed every time you dare to open a new tab.

Want to run these benchmarks yourself? Check out the full test suite on GitHub and share your results. I'm especially curious about Windows and Linux performance patterns.

Love this kind of comparisons. Not sure how you got to this calculation though: 33 seconds/day → 2.5 hours/month. Unless your months have almost 273 working days, something is off there.

I love this kind of benchmark based on daily tasks. Thanks for sharing, Pavel!